All-in-One Digital Marketing Platform at Your Fingertips

Elevate your brand effortlessly using our unified platform, designed for simplicity and effectiveness.

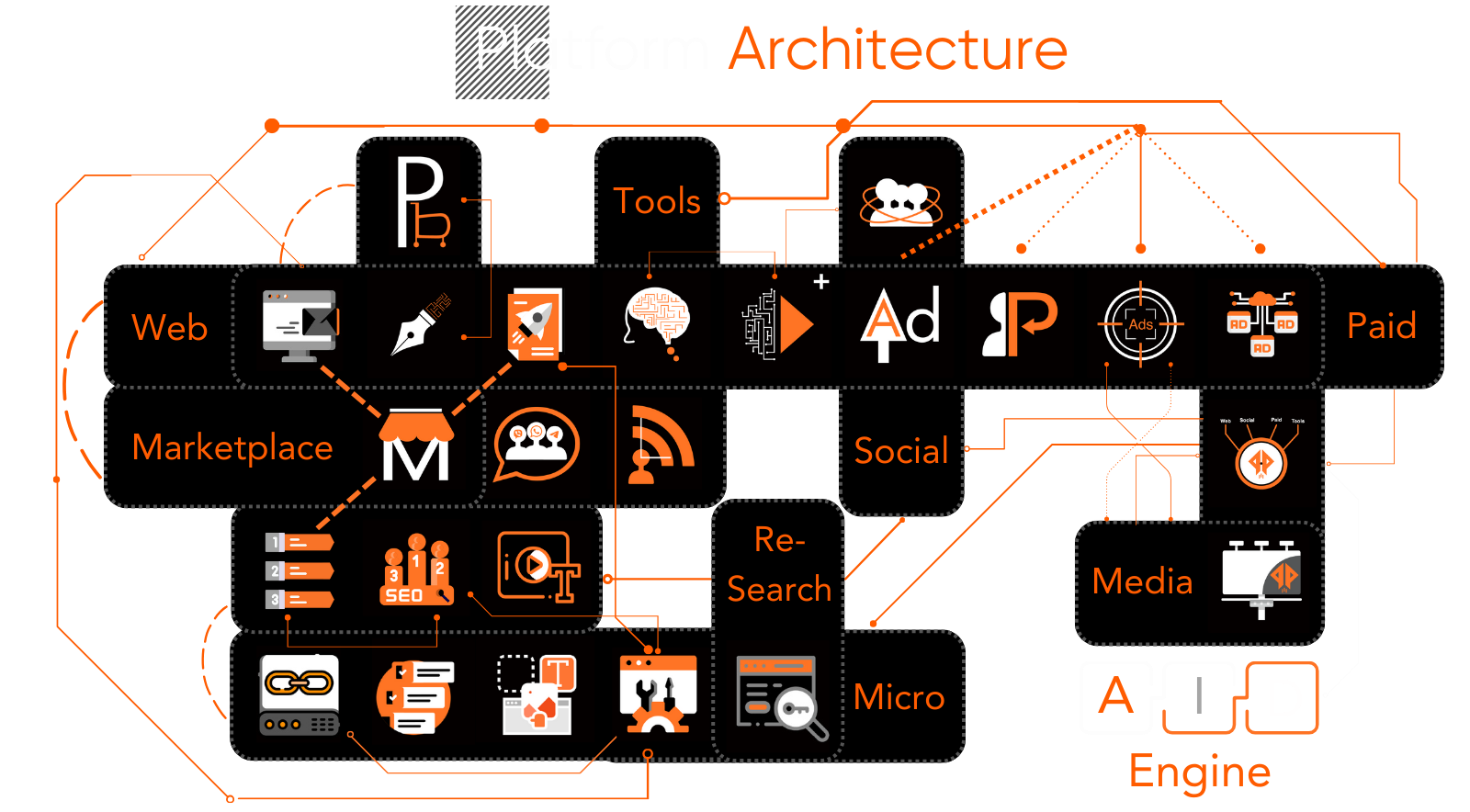

Our comprehensive solution covers web, social media, programmatic, and paid marketing services, along with research tools and a marketplace.

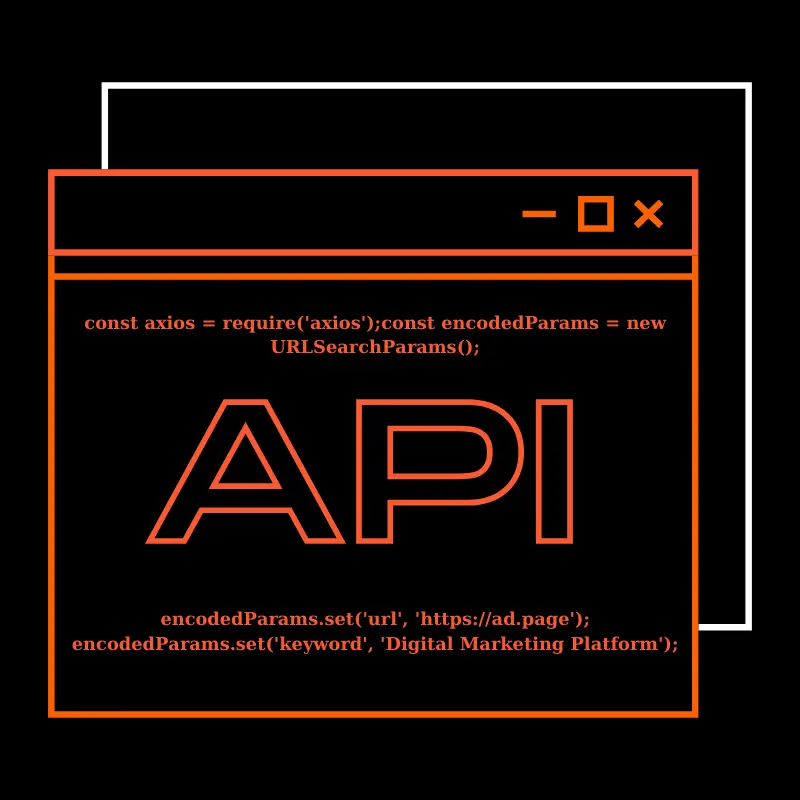

Enhance your business’s reach with our advanced APIs, part of the most sophisticated digital marketing platform available.

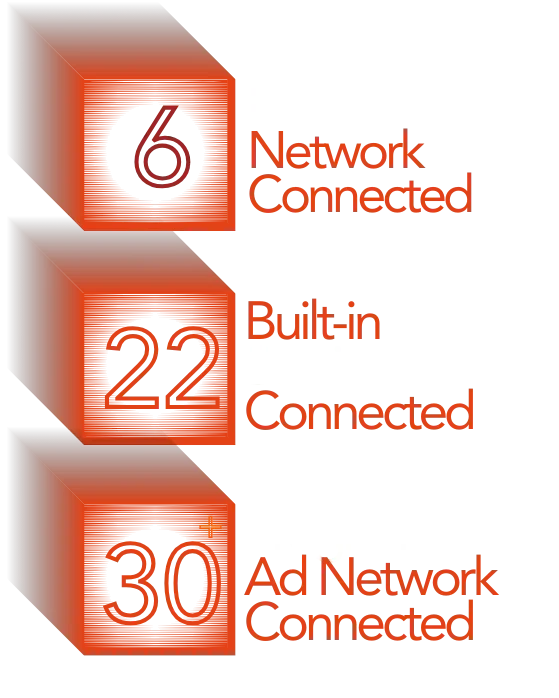

Access a Wide Range of Digital Marketing Channels with One Registration

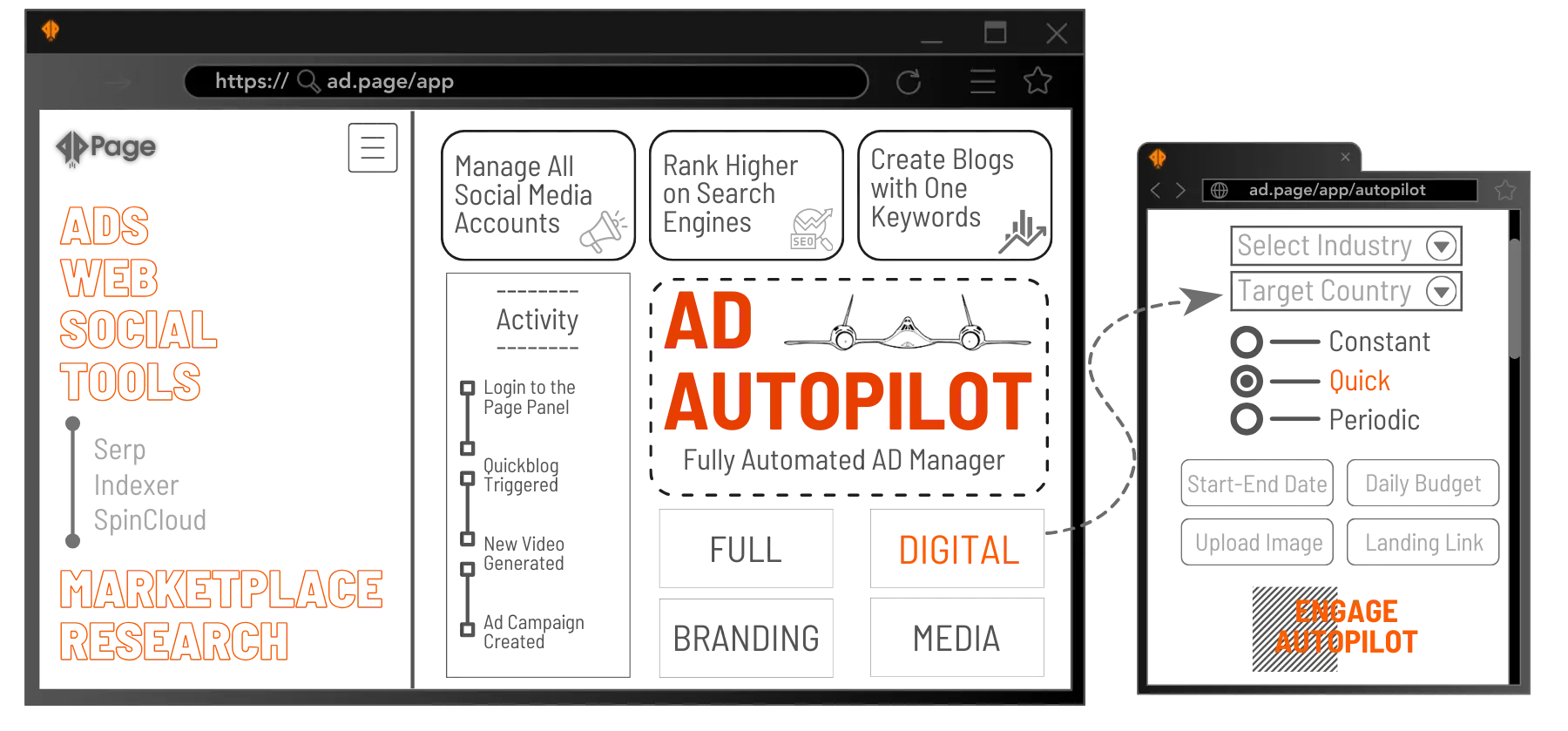

Platform Features

Platform Usage

Every Page service, whether used from the Web Dashboard, the mobile app, or the API suite, gives users access to the full set of tools and services.

Pay-As-You-Go

Pricing

Page Ads’ pay-as-you-go model charges only for what you actually use on your digital marketing campaigns. With no subscription-based monthly costs, your ad spend goes further, which makes it a cost-effective choice for advertising.

API

The API suite connects your systems to the Page API, allowing seamless integration and interaction with Page Ads tools and services.

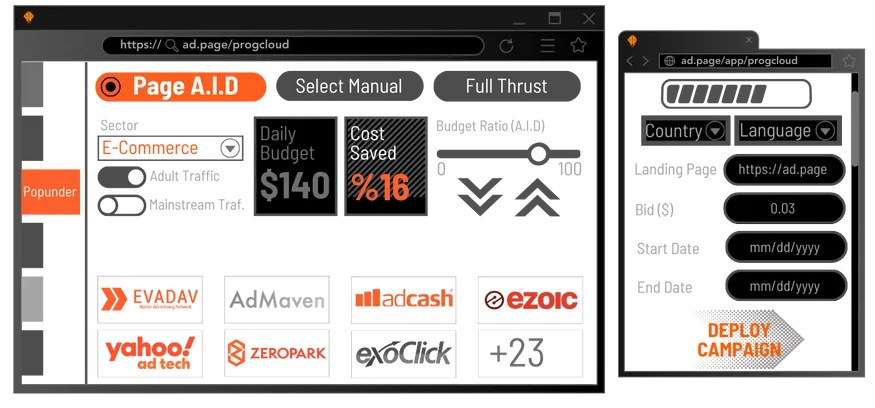

A.I.D Engine

The Ad Intelligence Database (A.I.D) is designed to eliminate the guesswork and manual labor traditionally associated with campaign optimization. It helps users navigate the advertising landscape with confidence and make informed decisions that lead to better campaign performance and higher ROI.

Cross Funnels

Preconfigured & Connected Marketing Funnels

Page Mash Ups is a streamlined marketing funnel that connects different channels in one interface. It simplifies tasks such as video processing, social media management, and ad campaigns, boosting efficiency and engagement at the push of a button.

- No Ad Account Needed

- All Sectors Accepted for Marketing

- Autonomous Campaign Manager

- 13 Ad Types

- Audience Targeting

- Auto Budget Manager

- 17% Cheaper on Average

- 20x Faster Ad Placement

Prog Cloud

Access, deploy, and manage over 30 programmatic ad platforms from one centralized platform.

Web Works

Sub-services include Page Ads/Monetizre for advertising, Page Social for one-click social media connectivity, and PageSoft for custom integrations that handle complex requests.

Quickblog

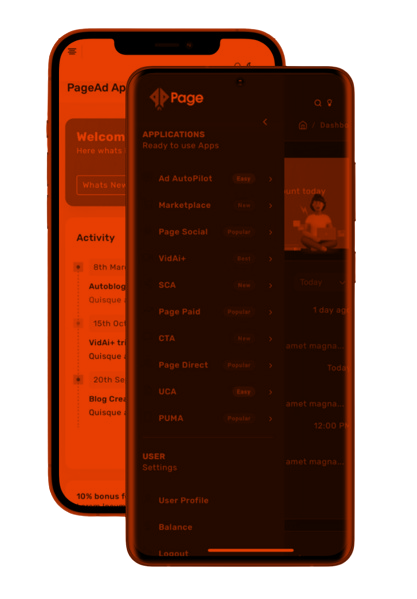

Get Page App

Manage even your largest campaigns through a single app.

- Access All Page Tools and Services

- Manage Your Entire Advertising Campaign

- Push Notifications for Desired Updates